My Solution to the Cloud Resume Challenge

So during Christmas 2022, I came across this challenge on the Azure Reddit board I frequent. Known as the Cloud Resume Challenge, it throws down the gauntlet to aspiring Cloud Engineers (and full-fledged ones too, if they are so inclined) to build a one-page resume site. For the Europeans in the audience like myself, a resume is a CV. Anyway, it's a bit more complicated than just sticking your resume/CV up as a doc file on some file storage service and calling it a day.

My Azure Serverless CV: https://cv.richardcoffey.com/

To complete the challenge, you have to display your CV as an HTML/CSS site on a static hosting frontend of some sort, backed by a few serverless processes, mainly to display a site visitor counter on your CV. You also have to bring it all together with some DevOps processes. This can be done on whichever cloud you choose. I of course chose Azure, got to work and produced this: https://cv.richardcoffey.com/

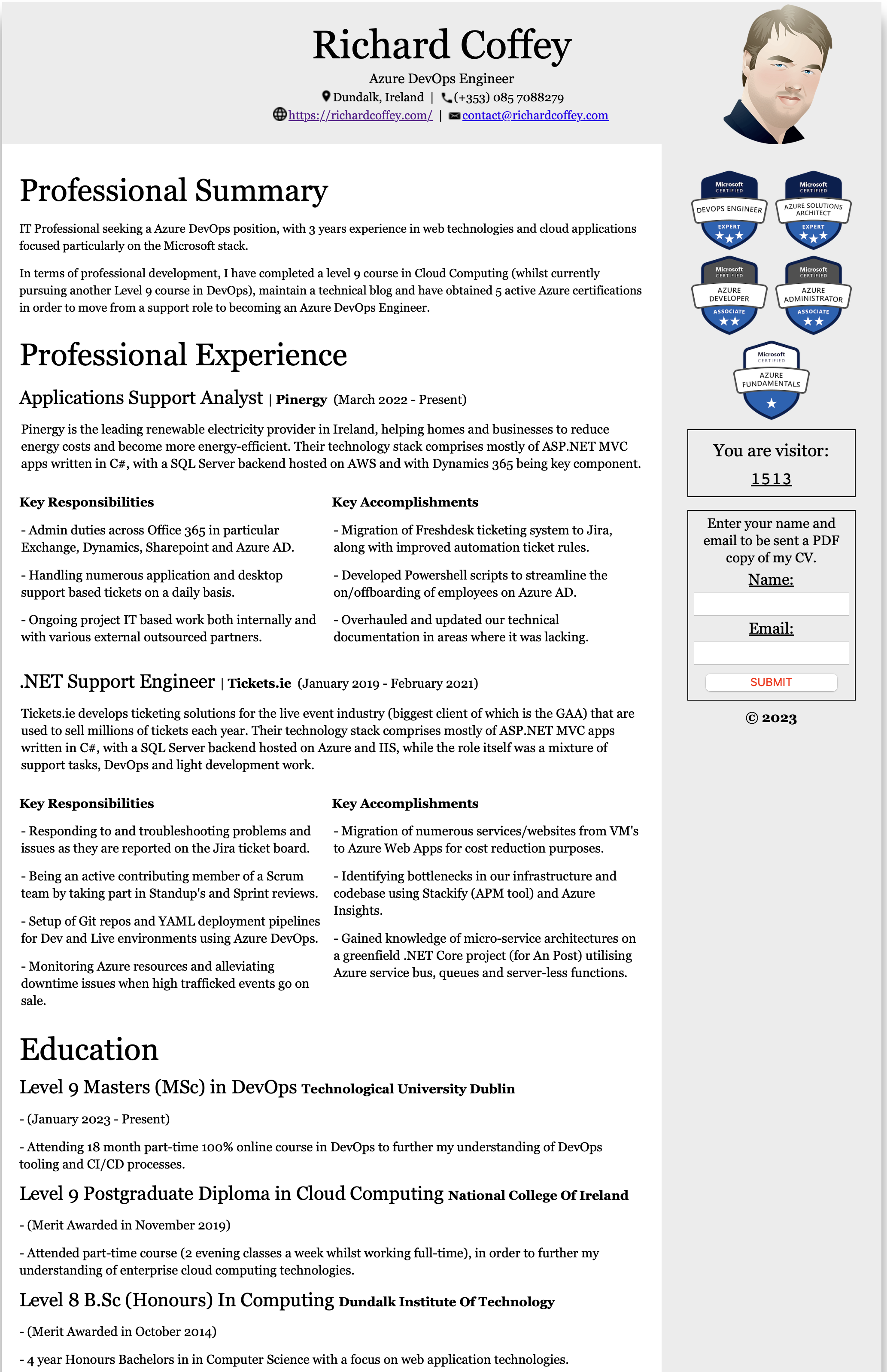

Judging by the activity on the Reddit board and on the Discord server run by the community of cloud engineers behind the challenge, it is a popular way for anyone who wants to get into cloud or DevOps to demonstrate some real-life technical skills. So I gave it a whirl, and below is the solution diagram I came up with before I started building the project in code.

Azure architecture diagram of the Serverless CV app. GitHub repos: https://github.com/richard-coffey?tab=repositories

I split the project into three Git repos, each with its own pipeline in Azure DevOps: one for the frontend code (HTML/CSS), one for the backend code (C# for the Azure Functions) and one for the Infrastructure as Code (Bicep). There are of course arguments to be made about how you should structure the Git repos for microservice-style apps, but this is what I went with.

Also, to make it a bit more interesting for myself, I added a task that isn't in the normal challenge: an email submission form where someone can enter their name and email address and be emailed a copy of my CV as a PDF. This and the aforementioned site visitor counter make up the backend functionality of the serverless app.

Frontend

I chose to host the frontend on Azure Static Web Apps. This is a relatively new service that only became generally available in 2021 (and is not to be confused with the similarly named static website hosting in Azure Storage). It comes with a built-in CDN, SSL, custom domains and tight integration with Azure DevOps/GitHub Actions, all on the free plan, and is a great way to host your static web content and plug your APIs in afterwards to build a dynamic and scalable frontend.

The HTML/CSS I used was taken from a template I found and tweaked a bit. The focus of this challenge is not on frontend design skills, so this is totally acceptable. The page also makes three JavaScript API calls: two PUT requests (one to increase the visitor counter by one every time someone visits the page and another to send the form submission) and one GET request (to get the current visitor count). I used a custom subdomain (cv.richardcoffey.com), and the integration between Static Web Apps and Azure DNS made this a seamless process to get up and running.
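The three calls could be written along these lines. This is a minimal TypeScript sketch, not the code on the live site: the gateway URL, the `/counter` and `/submit` routes and the `{ count: number }` response body are all hypothetical. `fetch` is passed in as a parameter so the logic can be exercised without a network.

```typescript
// Minimal shape of the fetch API these helpers rely on (the browser's fetch satisfies it).
type FetchLike = (
  url: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical base URL of the API Management gateway.
const API_BASE = "https://example-apim.azure-api.net/cv";

// PUT: ask the backend to add one to the visitor counter.
async function incrementVisitorCount(fetchFn: FetchLike, base: string = API_BASE): Promise<void> {
  const res = await fetchFn(`${base}/counter`, { method: "PUT" });
  if (!res.ok) throw new Error(`Counter update failed: HTTP ${res.status}`);
}

// GET: read the current visitor count (assumes a { count: number } JSON body).
async function getVisitorCount(fetchFn: FetchLike, base: string = API_BASE): Promise<number> {
  const res = await fetchFn(`${base}/counter`, { method: "GET" });
  if (!res.ok) throw new Error(`Counter read failed: HTTP ${res.status}`);
  const body = (await res.json()) as { count: number };
  return body.count;
}

// PUT: send the name/email form submission as JSON.
async function submitCvRequest(
  fetchFn: FetchLike,
  name: string,
  email: string,
  base: string = API_BASE
): Promise<void> {
  const res = await fetchFn(`${base}/submit`, {
    method: "PUT",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ name, email }),
  });
  if (!res.ok) throw new Error(`Form submission failed: HTTP ${res.status}`);
}
```

In the browser you would pass `window.fetch.bind(window)` and call `incrementVisitorCount` before `getVisitorCount`, so the number displayed already includes the current visit.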

Backend

The serverless side of this app is mainly driven by Azure Functions: small pieces of event-driven code that run on a pay-as-you-go Consumption pricing plan. For persistent storage I chose CosmosDB, a globally distributed NoSQL database which can run in serverless capacity mode for very little cost at my scale. I should mention here that all coding was done locally in VS Code, and with the right extensions it works really well for both writing and testing Azure Functions.

Azure Functions can be exposed directly as API endpoints, but since I was trying to follow best practices and plan for scale, I put the frontend-facing Azure Functions behind an Azure API Management service. There I can apply policies to the APIs, such as caching and rate limiting, and give them clean frontend-facing URLs. API Management also integrates with Application Insights (as do the Azure Functions), which can feed into Azure Monitor for observability (can't get enough of those stats!). The frontend-facing Azure Functions use HTTP triggers, firing when someone opens the page or clicks the form's submit button. At first I couldn't get my APIs working from the frontend, until I realised I needed to enable a CORS policy on my API Management service.
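The CORS problem makes sense once you look at what the browser does. The page lives on one origin and the API on another, so the browser only exposes responses that carry an `Access-Control-Allow-Origin` header matching the page. PUT is not a CORS-safelisted method, so before each PUT the browser also sends an OPTIONS preflight and only proceeds if the response allows the origin, method and headers. API Management's `cors` policy is configured declaratively in XML; the TypeScript below is only a hypothetical model of the decision that policy makes, assuming the site's custom domain is the only allowed origin and a 300-second preflight cache.

```typescript
// Hypothetical allow-list: only the CV's custom domain may call the API.
const ALLOWED_ORIGINS = new Set(["https://cv.richardcoffey.com"]);
const ALLOWED_METHODS = ["GET", "PUT", "OPTIONS"];
const ALLOWED_HEADERS = ["Content-Type"];

// Returns the CORS headers to attach to a response, or null if the origin is not allowed
// (in which case the browser will block the page from reading the response).
function corsHeaders(origin: string | undefined, method: string): Record<string, string> | null {
  if (origin === undefined || !ALLOWED_ORIGINS.has(origin)) return null;
  const headers: Record<string, string> = {
    "Access-Control-Allow-Origin": origin,
    Vary: "Origin", // responses differ per origin, so caches must key on it
  };
  if (method === "OPTIONS") {
    // Preflight: tell the browser which methods and headers the real request may use.
    headers["Access-Control-Allow-Methods"] = ALLOWED_METHODS.join(", ");
    headers["Access-Control-Allow-Headers"] = ALLOWED_HEADERS.join(", ");
    headers["Access-Control-Max-Age"] = "300"; // assumed preflight cache lifetime in seconds
  }
  return headers;
}
```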

Once these functions are triggered, I could have written their data directly to the CosmosDB database, but I added an intermediate step, which I believe is good practice when scaling real-life applications. That middleman is Azure Queue Storage, which takes the messages from the Azure Functions (the site counter value and the form submission) and puts them on a queue. Another Azure Function sitting behind the queue is then triggered, takes each message and writes it to the CosmosDB database. Not exposing your database to your APIs, and putting a queuing service in between instead, is in my view important for scaling and reliability: the queue absorbs bursts of traffic and holds on to messages if the database is temporarily unavailable. I could have gone with Azure Service Bus here, but that's a more expensive solution than Queue Storage.
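The queue-in-the-middle pattern can be sketched in a few lines. This is a hypothetical in-memory model, not Azure code: `InMemoryQueue` stands in for Queue Storage, `Store` for the CosmosDB containers, and the message shapes and the `202 Accepted` status are assumptions. The property it illustrates is that the HTTP-facing function only enqueues, and only the queue-triggered function writes to the database.

```typescript
// Hypothetical message shapes placed on the queue.
type QueueMessage =
  | { kind: "visit" }
  | { kind: "cvRequest"; name: string; email: string };

// In-memory stand-in for Azure Queue Storage: a FIFO of serialized messages.
class InMemoryQueue {
  private items: string[] = [];
  send(msg: QueueMessage): void {
    this.items.push(JSON.stringify(msg));
  }
  receive(): QueueMessage | undefined {
    const raw = this.items.shift();
    return raw === undefined ? undefined : (JSON.parse(raw) as QueueMessage);
  }
  get length(): number {
    return this.items.length;
  }
}

// In-memory stand-in for the CosmosDB data.
interface Store {
  visitorCount: number;
  submissions: { name: string; email: string }[];
}

// Frontend-facing HTTP function: enqueue and return immediately; it never touches the store.
function httpTrigger(queue: InMemoryQueue, msg: QueueMessage): number {
  queue.send(msg);
  return 202; // assumed "Accepted" status: the write happens asynchronously
}

// Queue-triggered function: the only code path that writes to the store.
function queueTrigger(msg: QueueMessage, store: Store): void {
  if (msg.kind === "visit") store.visitorCount += 1;
  else store.submissions.push({ name: msg.name, email: msg.email });
}

// Drain the queue, as the Functions runtime would by invoking queueTrigger per message.
function drain(queue: InMemoryQueue, store: Store): void {
  for (let m = queue.receive(); m !== undefined; m = queue.receive()) queueTrigger(m, store);
}
```

In the real service, the Functions runtime retries a failing queue message several times before moving it to a poison queue, so a database outage delays writes rather than losing them.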

Sitting behind CosmosDB are two final Azure Functions. The first uses a CosmosDB trigger: when someone submits the form, it calls SendGrid (for which I needed a SendGrid API key) to send out an email with my PDF CV attached. The other uses an HTTP trigger, firing when someone opens the page; it retrieves the counter value from CosmosDB and returns it for display (passing back through the API Management service first).
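The email function mostly comes down to building a SendGrid request. The sketch below builds the JSON body for SendGrid's v3 Mail Send API (`POST https://api.sendgrid.com/v3/mail/send`, authenticated by sending the API key as a bearer token); the `CvRequest` document shape, the subject line, the message text and the `cv.pdf` filename are hypothetical. SendGrid expects attachment content to be base64-encoded.

```typescript
// A form submission as it might be stored in CosmosDB (hypothetical shape).
interface CvRequest {
  id: string;
  name: string;
  email: string;
}

// The subset of the SendGrid v3 mail/send request body used here.
interface SendGridMail {
  personalizations: { to: { email: string; name?: string }[] }[];
  from: { email: string; name?: string };
  subject: string;
  content: { type: string; value: string }[];
  attachments: { content: string; filename: string; type: string; disposition: string }[];
}

// Build one email for one new submission; pdfBase64 is the CV already base64-encoded.
function buildCvEmail(req: CvRequest, pdfBase64: string, fromAddress: string): SendGridMail {
  return {
    personalizations: [{ to: [{ email: req.email, name: req.name }] }],
    from: { email: fromAddress },
    subject: "Requested CV", // hypothetical subject line
    content: [{ type: "text/plain", value: `Hi ${req.name},\n\nPlease find my CV attached.` }],
    attachments: [
      { content: pdfBase64, filename: "cv.pdf", type: "application/pdf", disposition: "attachment" },
    ],
  };
}

// The CosmosDB trigger delivers changed documents in batches; build one email per document.
function onCosmosChanges(docs: CvRequest[], pdfBase64: string, fromAddress: string): SendGridMail[] {
  return docs.map((d) => buildCvEmail(d, pdfBase64, fromAddress));
}
```

One design point to watch: the CosmosDB trigger fires on inserts and updates in the container it monitors, so if the visitor counter shared a container with the form submissions, this function would need to skip counter documents.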

The final thing to say about the backend is that I again followed best practices for storing the connection strings (for Queue Storage and CosmosDB) and the SendGrid API key that the various Azure Functions require: they live in Azure Key Vault. Never storing sensitive information like connection strings in code is a basic security rule every developer should know. Instead, the code reads variables from the Azure Functions' app configuration settings, which in turn reference the secrets stored in Key Vault. The Azure Functions also need a system-assigned managed identity that has been granted access to the Key Vault so they can read those secrets. Storing Key Vault references in the app settings also lets you easily swap those references out via the variable group in Azure DevOps when you run the pipeline, if you wish.
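Here is a small sketch of the two halves of that arrangement. `@Microsoft.KeyVault(SecretUri=...)` is the format App Service and Azure Functions use for Key Vault references in app settings; the vault and secret names are hypothetical, and `requireSetting` is an illustrative helper, not part of any Azure SDK. The environment is passed in as a parameter to keep the sketch testable; in a Node.js function you would pass `process.env`.

```typescript
// Builds an app-setting value that tells the platform to resolve a secret from Key Vault.
// Omitting the secret version means the latest version is used.
function keyVaultReference(vaultName: string, secretName: string): string {
  return `@Microsoft.KeyVault(SecretUri=https://${vaultName}.vault.azure.net/secrets/${secretName}/)`;
}

// At runtime the platform has already replaced the reference with the secret value,
// so function code just reads an ordinary app setting.
function requireSetting(env: Record<string, string | undefined>, name: string): string {
  const value = env[name];
  if (value === undefined || value === "") throw new Error(`Missing app setting: ${name}`);
  if (value.startsWith("@Microsoft.KeyVault(")) {
    // An unresolved reference is left as the raw string, often because the
    // managed identity has not been granted access to the vault.
    throw new Error(`Key Vault reference for ${name} was not resolved`);
  }
  return value;
}
```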

Infrastructure

From the beginning of the project I followed good IaC principles by creating all my Azure resources with Bicep rather than through the portal. Bicep is a relatively new DSL from Microsoft that compiles down to ARM templates but is far more human-readable, and I greatly prefer it to writing ARM directly. With the right VS Code extension, which lets you write Bicep code quite quickly, I was able to provision all the resources as I needed them. I also parameterised a few important values, such as the resource group name (which can be changed in the variable group at release time in Azure DevOps). Bicep also offers one really cool feature that is much clunkier in ARM, where you have to rely on linked templates: modules, which let you split your Bicep code into smaller files that are more readable and, importantly, reusable in other projects if needed.

Next I needed to write the YAML files for my three pipelines. I'm not doing anything too crazy here: the frontend and infrastructure pipelines just build and deploy what's there on a push to the main branch. The backend pipeline has an additional testing stage: I wrote individual unit tests in NUnit for each Azure Function, and these must pass before deployment can occur. Variables from the variable group I set up also get imported into the YAML files, allowing you to switch different variable values in and out quickly. In a real-life production scenario, I would have releases into development, testing and production environments (and possibly deployment strategies like canary or blue/green) specified in these YAML files.

All the Azure resources for my Serverless CV solution.